Month: June 2022

Human Action Recognition with Deep Learning and Computer Vision

Image source: freepik.com

This post is an overview of the Deep-Learning-based Human Action Recognition (HAR) problem. It presents some computer vision solutions to this problem and introduces datasets relevant to HAR.

If you are interested in this topic, you can also read:

Building an Automatic Fall Detection System using computer vision.

Guide to Data Labeling Methods, Challenges, and Solutions

You can find our related work on this subject on the Blogs and Solutions pages.

1. Introduction

After working on several Computer Vision (CV) problems, such as object detection and human pose estimation, the Galliot team has decided to apply those techniques to Human Action Recognition (HAR). The purpose of this article is to discuss the definition of HAR, its applications, and different approaches used to solve the problem using Computer Vision and Deep Learning techniques.

Disclaimer

This article is not a step-by-step guide to Human Action Recognition and does not provide a complete code implementation. It is a review of this field and of various approaches to building a HAR system.

2. Deep Learning-Based HAR Problem Statement

The adoption of digital devices and digital record-keeping (digitalization) has made it possible to build robust Artificial Intelligence systems. As a result, the automatic understanding of video footage has become very popular in this domain. This understanding includes finding objects in a scene, classifying videos, and predicting human activities. The last of these is called “Human Action Recognition” in the AI and machine learning literature: the task of identifying people’s specific movements or actions in video frames. For example, such systems can be employed in a factory to monitor the safety and performance of the workers and to detect malfunctions in the overall process. These systems detect workers’ actions, such as working with tools, talking on the phone, sitting, lying down, etc.

In simple terms, a HAR system takes a chunk of video footage and detects the activities of the individuals in it. It can therefore be formulated as a video classification problem in machine learning.

Temporal Relation in HAR

Treating this problem as plain image classification may decrease accuracy. In image classification, we classify an image using the semantics and features of that single image. To identify an action, however, we also need to consider what happens across a sequence of frames, i.e., the temporal relation of the frames is important.

Imagine an action like sitting down on a chair; if we ignore the temporal relation of the frames, our model might recognize it as a standing-up action. For example, if we do not know the order of the frames in Figure 1, or make our decision using only one frame, we would probably detect the wrong action.

3. Human Action Recognition Applications

The availability of high-performance processors (GPUs and TPUs) and edge devices that can handle massive real-time computations on images and videos has made HAR a hot topic in the Computer Vision / Deep Learning community.

Human Action Recognition is used in a wide range of applications, from healthcare to understanding visual information for security and surveillance. People’s interest in interacting with computers through body gestures has also led to the design of human-computer interaction applications; controlling a slide presentation with hand movements is a simple example. Another group of HAR applications extracts information from videos for purposes like monitoring and surveillance. This could mean monitoring workers in industry or preserving public security, where crowds’ movements are tracked to detect crime or violence in different situations, e.g., detecting robbery.

Other important applications of HAR systems are in healthcare, where they can improve healthcare and well-being services in various scenarios. For example, they make it possible to monitor vulnerable people automatically, as in Automatic Fall Detection systems.

If you want to read more about Automatic Fall Detection systems based on Computer Vision techniques, you can refer to Galliot’s article on this subject.

HAR systems are also used for other tasks, like sign language translation, interpreting the state of a person’s body, Active and Assisted Living (AAL) systems for smart homes, and Tele-Immersion (TI) applications.

4. Datasets

With the emergence of Deep Learning and its numerous applications in Computer Vision, there have been several attempts to solve the HAR problem using Deep Learning techniques. Accordingly, various datasets have been collected for training Deep Learning models to recognize human actions. Below, we introduce some of the most widely used datasets in this field.

4.1. DeepMind Kinetics (Versions: 400, 600, 700):

This dataset is a collection of URL links to YouTube video clips, each around 10 seconds long. Each clip is human-annotated with a single action class. The version number (400/600/700) indicates the number of action classes each dataset covers; each version also contains at least 400/600/700 video clips per action class, respectively. The actions are human-focused and fall into two categories: human-object interactions (like playing guitar) and human-human interactions (like handshaking).

You can find the related papers and download the datasets on this DeepMind Kinetics web page.

4.2. Something Something:

Qualcomm Technologies has published this dataset in two versions (V1 & V2), of which V1 is outdated and no longer available. The Something-Something V2 dataset consists of more than 220K annotated videos, specially designed for training machine learning models to understand human hand gestures in basic everyday actions. The dataset’s name also reflects the type of these actions, for example: moving something up/down, putting something on something, tearing something, etc.

4.3. Epic Kitchens:

The Epic Kitchens dataset consists of 100 hours of Full HD recordings with 90K action segments collected from 45 kitchens in 4 cities. It includes 97 verb classes and 300 noun classes. The videos are captured in first-person view by a head-mounted camera. They show everyday kitchen activities in unscripted recordings made in native environments. You can watch the Epic Kitchens demonstration in this YouTube video.

4.4. UCF-101 Action Recognition Dataset:

This is a HAR dataset of realistic action videos collected from YouTube. It contains 13,320 videos from 101 action categories; the videos of each category are divided into 25 groups, each containing at least four videos of that action. The actions fall into five main types: 1) Human-Object Interaction, 2) Body-Motion Only, 3) Human-Human Interaction, 4) Playing Musical Instruments, and 5) Sports. Here you can read more about UCF101 and find its action categories.

5. Deep Learning Approaches to HAR

Researchers have put considerable effort into various approaches to Human Action Recognition over the past decade. These approaches mainly include Radio Frequency-Based, Sensor-Based, Wearable Device-Based, and Vision-Based methods. Among these, vision-based approaches have become very popular because video-capturing devices are abundant and powerful processors are widely available. However, the diversity of human actions makes HAR a challenging task for vision-based methods.

Many Computer Vision solutions have been proposed for Human Action Recognition in past years, but most of them failed to handle the long video sequences produced by surveillance cameras. Recently, advances in deep learning and computer vision have shown promising results on the HAR problem. Most of these systems use Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs) in their architectures.

Given our background, this post focuses on the Deep Learning/Computer Vision-based approaches to the HAR problem. The following sections introduce some of these approaches.

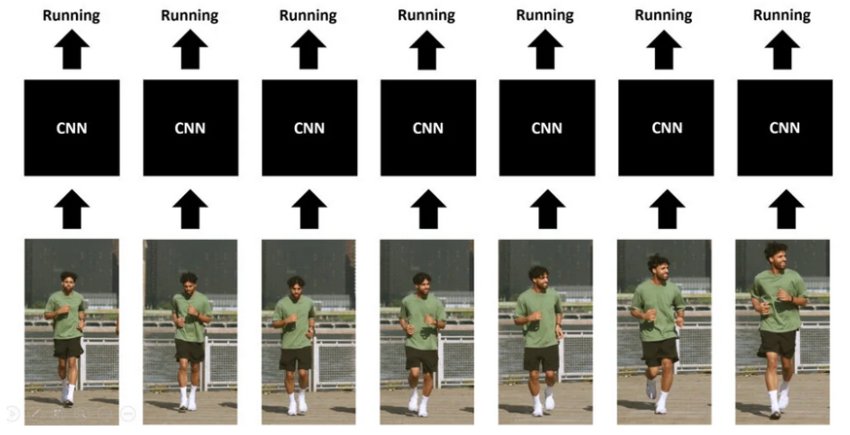

5.1. Single-Frame CNN:

This might be the most straightforward approach to recognizing human actions. Here, instead of feeding the model a sequence of frames (which preserves the temporal relation of the images), we treat each frame as an independent image. This effectively turns Human Action Recognition into simple image classification. We then send these separate images (frames) to an image classifier and average the individual class probabilities to get the final output.

As mentioned, this simple approach also lets us use many pre-trained image classification models, such as EfficientNet or ResNet, for fast training. On the other hand, it ignores the temporal relation of the frames discussed earlier.
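To make the idea concrete, here is a minimal NumPy sketch of single-frame prediction. It assumes `classify_frame` is any image classifier that returns class probabilities for one frame; `toy_classifier` is a hypothetical stand-in, not a real model. The last lines also show why temporal order is lost: averaging gives a clip and its reversed version (think sitting down vs. standing up) exactly the same prediction.

```python
import numpy as np

def single_frame_predict(frames, classify_frame):
    """Single-Frame CNN inference: classify each frame independently,
    then average the per-frame class probabilities.

    frames: array of shape (T, H, W, 3)
    classify_frame: callable mapping one (H, W, 3) frame to a probability
        vector of shape (num_classes,), e.g. a pre-trained ResNet.
    """
    probs = np.stack([classify_frame(f) for f in frames])  # (T, num_classes)
    return probs.mean(axis=0)                               # (num_classes,)

def toy_classifier(frame):
    """Hypothetical stand-in classifier with two classes."""
    b = frame.mean() / 255.0
    return np.array([b, 1.0 - b])

rng = np.random.default_rng(0)
clip = rng.integers(0, 256, size=(8, 4, 4, 3)).astype(np.float32)

forward = single_frame_predict(clip, toy_classifier)
backward = single_frame_predict(clip[::-1], toy_classifier)  # reversed clip

# Averaging ignores frame order, so both clips get the same prediction.
print(np.allclose(forward, backward))  # True
```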

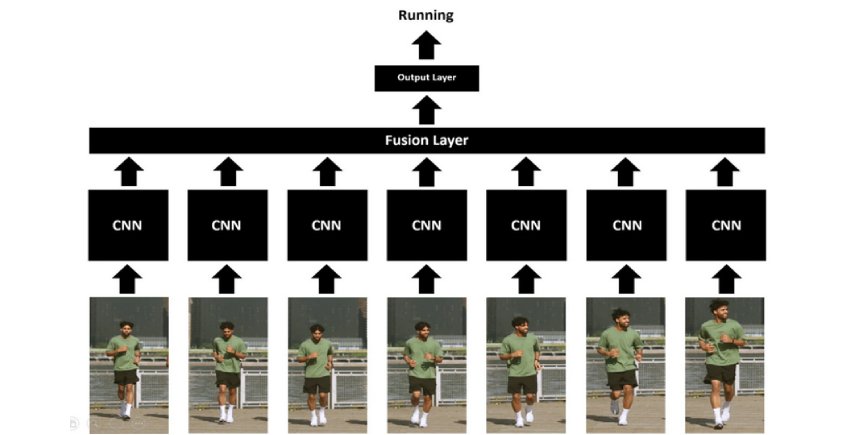

5.2. Late-Fusion CNN:

Apart from a slightly more complex averaging/fusion layer, Late Fusion is practically similar to the Single-Frame CNN approach. In the previous method, we fed separate images into the model, obtained class probabilities for each frame, and then naïvely averaged them after the network had finished its work. In the Late-Fusion approach, however, the fusion layer is built into the network and performs the averaging (or another, possibly learnable, fusion technique) before the output layer. Because it is part of the network, this layer can learn to combine information across the frame sequence.

In the Late-Fusion approach, we first extract a feature vector for each frame of the sequence. These vectors represent the spatial features of each frame. The per-frame features are then passed to the fusion layer, a trainable layer that merges them and sends the result to the output layer for decision-making.

The fusion layer is responsible for merging the outputs of several separate CNN branches, each operating on a different, possibly distant, frame. Each branch processes one image of the frame sequence, much like an image classifier, and the fusion layer then merges their outputs. The fusion layer can be implemented with various techniques, but max pooling, average pooling, and flattening (concatenation) are the most commonly used.
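Below is a minimal NumPy sketch of a late-fusion head, assuming the per-frame feature vectors have already been extracted by a CNN (random numbers stand in for them here). The names `fuse` and `late_fusion_logits` are illustrative, not from any library. Note that average and max pooling are insensitive to frame order, while flattening keeps it.

```python
import numpy as np

def fuse(frame_features, method="avg"):
    """Fusion layer over per-frame features of shape (T, D)."""
    if method == "avg":
        return frame_features.mean(axis=0)   # (D,), order-independent
    if method == "max":
        return frame_features.max(axis=0)    # (D,), order-independent
    if method == "flatten":
        return frame_features.reshape(-1)    # (T * D,), keeps frame order
    raise ValueError(f"unknown fusion method: {method}")

def late_fusion_logits(frame_features, weights, bias, method="avg"):
    """Fuse per-frame features, then apply a linear output layer."""
    return fuse(frame_features, method) @ weights + bias

T, D, C = 8, 16, 5                    # frames, feature size, classes (arbitrary)
rng = np.random.default_rng(0)
features = rng.normal(size=(T, D))    # stand-in for per-frame CNN features

w_pool = rng.normal(size=(D, C))      # output layer for avg/max fusion
w_flat = rng.normal(size=(T * D, C))  # output layer for flatten fusion
bias = np.zeros(C)

avg_logits = late_fusion_logits(features, w_pool, bias, "avg")
max_logits = late_fusion_logits(features, w_pool, bias, "max")
flat_logits = late_fusion_logits(features, w_flat, bias, "flatten")

# Reversing the frames changes the flatten result but not the pooled ones.
reversed_feats = features[::-1]
print(np.allclose(avg_logits, late_fusion_logits(reversed_feats, w_pool, bias, "avg")))
print(np.allclose(flat_logits, late_fusion_logits(reversed_feats, w_flat, bias, "flatten")))
```

In a real network the output layer would be trained jointly with (or on top of) the frame-level CNN; the random weights here only demonstrate the shapes and the order (in)sensitivity of each fusion choice.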

Temporal Difference Networks (TDN) and Temporal Segment Networks (TSN) are two of the common methods for implementing late fusion solutions in human action recognition.

5.2.1. TDN (Temporal Difference Networks)

TDN is a late-fusion-based method for capturing the relations between distant frames in a sequence. Its main contribution is a temporal modeling design that captures both short-term and long-term dependencies between frames. To do this, TDN combines CNNs with motion information: a Convolutional Neural Network first extracts each frame’s spatial features, and motion information, obtained from temporal differences between frames, then gives the network a higher-level understanding of how the frames relate.
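TDN’s actual temporal difference modules operate inside the network and are more elaborate than this; the NumPy sketch below only illustrates the basic idea of using differences between neighboring frames as a cheap motion signal. The function name `frame_differences` and the toy clip are our own.

```python
import numpy as np

def frame_differences(clip):
    """Differences between consecutive frames of a (T, H, W, C) clip.

    Returns an array of shape (T - 1, H, W, C). Static regions give zeros,
    so non-zero values highlight where something moved.
    """
    clip = clip.astype(np.float32)  # avoid uint8 wrap-around on subtraction
    return clip[1:] - clip[:-1]

# Toy clip: a bright 1-pixel "object" moving one column right per frame.
T, H, W = 4, 3, 5
clip = np.zeros((T, H, W, 1), dtype=np.uint8)
for t in range(T):
    clip[t, 1, t, 0] = 255

diffs = frame_differences(clip)
motion_energy = np.abs(diffs).sum(axis=(1, 2, 3))  # one value per frame pair
print(motion_energy)  # [510. 510. 510.]
```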

5.2.2. TSN (Temporal Segment Networks):

TSN is another late-fusion-based method; it aims to model long-range temporal structure in action recognition. Instead of using only RGB images as input, it supplements the network with other modalities. For example, TSN feeds Optical Flow fields to a separate CNN stream alongside the RGB stream, so that motion information between frames is available from the network’s input. Finally, the predictions of all these modalities are fused to reach the final prediction. The TSN paper also investigates various fusion layers (aggregation modules) for improving the model’s performance and compares the results of the following aggregation modules:

– Max Pooling

– Average Pooling

– Top-K Pooling

– Weighted Average

– Attention Weighting
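Here is a rough NumPy sketch of these aggregation modules applied to per-segment class scores. It reflects the general ideas behind each module rather than TSN’s exact formulation: the weights and attention logits are passed in directly, whereas in a trained model they would be learned (for attention, predicted from the segment features). The function `aggregate` and the scores are hypothetical.

```python
import numpy as np

def aggregate(scores, method="avg", k=2, weights=None, attn_logits=None):
    """Combine per-segment class scores of shape (N, C) into one (C,) vector."""
    s = np.asarray(scores, dtype=float)
    if method == "max":
        return s.max(axis=0)
    if method == "avg":
        return s.mean(axis=0)
    if method == "topk":
        # For each class, average its k highest segment scores.
        return np.sort(s, axis=0)[-k:].mean(axis=0)
    if method == "weighted":
        w = np.asarray(weights, dtype=float)           # one weight per segment
        return (w[:, None] * s).sum(axis=0) / w.sum()
    if method == "attention":
        a = np.asarray(attn_logits, dtype=float)
        a = np.exp(a - a.max())
        a /= a.sum()                                    # softmax over segments
        return (a[:, None] * s).sum(axis=0)
    raise ValueError(f"unknown aggregation method: {method}")

# Class scores for N = 3 segments and C = 2 classes (made-up numbers).
scores = [[0.1, 0.9],
          [0.8, 0.2],
          [0.6, 0.4]]
for m in ("max", "avg", "topk"):
    print(m, aggregate(scores, m))
print("weighted", aggregate(scores, "weighted", weights=[1, 2, 1]))
print("attention", aggregate(scores, "attention", attn_logits=[0.0, 1.0, 0.0]))
```

With equal weights (or equal attention logits) the last two modules reduce to average pooling, while Top-K pooling sits between max and average pooling.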

Frame Sampling in Late Fusion

Imagine a 30 fps video clip; each minute of this video contains 1800 frames, and analyzing all of them imposes a high computational cost on the network. So, how do these methods handle this issue?

These methods do not feed the network densely with all of the frames. Instead, they divide the video into N segments of equal length, which together cover the whole video. They then randomly select one frame from each segment and feed these N frames to the network to perform the desired video analytics task.

So, in our 30 fps example, if we put 10 frames in each segment, we will have 180 segments for each minute of video (N = 180). Then, instead of feeding the network 1800 frames per minute, we input 180 frames.
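The segment-based sampling described above can be sketched in a few lines of Python. `sample_segment_frames` is a hypothetical helper, not code from TSN or TDN; real implementations also handle videos whose length is not a multiple of N, which this sketch simply truncates.

```python
import random

def sample_segment_frames(num_frames, num_segments, rng=random):
    """Sparse segment-based sampling.

    Splits frame indices 0..num_frames-1 into `num_segments` equal segments
    and returns one randomly chosen index per segment. Leftover frames at
    the end (when num_frames is not divisible) are ignored in this sketch.
    """
    seg_len = num_frames // num_segments
    if seg_len == 0:
        raise ValueError("need at least one frame per segment")
    return [s * seg_len + rng.randrange(seg_len) for s in range(num_segments)]

fps, seconds, frames_per_segment = 30, 60, 10
num_frames = fps * seconds                        # 1800 frames per minute
num_segments = num_frames // frames_per_segment   # N = 180
indices = sample_segment_frames(num_frames, num_segments, random.Random(0))
print(num_frames, num_segments, len(indices))     # 1800 180 180
```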

5.3. Early-Fusion CNN:

In contrast to the late-fusion approaches, this method fuses the frames at the beginning of the deep neural network. There are various ways to do this. One of them is to stack the RGB frames along a new temporal dimension, which creates a four-dimensional tensor of shape (T x 3 x H x W), where “T” is the temporal dimension, i.e., the number of frames we put in each sequence segment.

Returning to the previous example, where we divided the frame sequence into 180 segments of 10 frames each, “T” equals ten (T = 10).

In this tensor, 3 stands for the three RGB channels, and H and W are the spatial dimensions (height and width). After fusion, this (T x 3 x H x W) tensor becomes a tensor of shape (3T x H x W). Stacking the frames together and feeding them to the network enables the CNN to learn the temporal relation of the frames from its first layer.
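As a minimal sketch of this reshaping, assuming T = 10 and an arbitrary 112 x 112 frame size with random pixel values, the NumPy code below merges the temporal and channel axes and then computes one output pixel of a hypothetical first convolution layer with 3T input channels. A deep learning framework would do the same with a 2D convolution whose input channel count is 3T.

```python
import numpy as np

T, H, W = 10, 112, 112
clip = np.random.default_rng(0).random((T, 3, H, W), dtype=np.float32)

# Early fusion: merge the temporal and channel axes so that a standard 2D
# CNN's first layer sees all T frames at once as 3T input channels.
fused = clip.reshape(T * 3, H, W)
print(fused.shape)  # (30, 112, 112)

# Channels are ordered frame by frame: channels 3t..3t+2 are the
# R, G, B planes of frame t.
assert np.array_equal(fused[3 * 4 + 1], clip[4, 1])

# A first convolution layer needs 3T input channels, i.e. a weight tensor
# of shape (out_channels, 3T, k, k). One output pixel is a weighted sum
# over the local patch of every frame at once.
out_channels, k = 64, 3
weights = np.random.default_rng(1).normal(size=(out_channels, 3 * T, k, k))
patch = fused[:, :k, :k]                                   # (3T, k, k)
first_pixel = np.tensordot(weights, patch, axes=([1, 2, 3], [0, 1, 2]))
print(first_pixel.shape)  # (64,)
```

The fixed 3T input channels are also why this kind of model cannot directly reuse a standard pre-trained image classifier, whose first layer expects 3 channels.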

Here is a summary of the Pros and Cons of this approach:

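Pros:

– Temporal relations are modeled from the first layer of the network.

– Accuracy is usually higher.

Cons:

– The network’s first layer must be customized to accept 3T input channels instead of 3, so standard pre-trained image classifiers cannot be used unmodified.

– The training phase is slower.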

Below, we introduce two early-fusion methods for the action recognition problem.

5.3.1. SlowFast networks:

The SlowFast network is designed for video understanding, which makes it suitable for action recognition. The intuition behind it is simple: the authors observed that not every part of a video takes part in the motion when an action is performed. For example, walking does not change the semantic features of a person; they can be recognized as a person whether they are walking or doing something else. Based on this intuition, the authors developed the SlowFast network, which has two pathways: 1) the Slow pathway, operating at a low frame rate, captures the semantics of objects, and 2) the Fast pathway, operating at a high frame rate, captures motion. Both pathways use 3D ResNets, whose 3D convolutions combine information across frames inside the network rather than only at its end. So, we can categorize the SlowFast network as an Early-Fusion approach.
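In the SlowFast paper, the two pathways differ mainly in temporal sampling: the Slow pathway takes one frame every τ frames, while the Fast pathway samples α times more frames (and uses fewer channels). A minimal sketch of this sampling is shown below, assuming τ = 16, α = 8, and a 64-frame raw clip of placeholder pixels; `slowfast_inputs` is a hypothetical helper, not part of the official code.

```python
import numpy as np

def slowfast_inputs(clip, tau=16, alpha=8):
    """Build the two SlowFast inputs from a raw clip of shape (T_raw, H, W, 3).

    Slow pathway: one frame every `tau` frames (low frame rate).
    Fast pathway: stride tau // alpha, i.e. alpha times more frames.
    """
    slow = clip[::tau]
    fast = clip[::tau // alpha]
    return slow, fast

raw_clip = np.zeros((64, 112, 112, 3), dtype=np.uint8)  # placeholder frames
slow, fast = slowfast_inputs(raw_clip)
print(slow.shape[0], fast.shape[0])  # 4 32
```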

Lateral connections from the Fast to the Slow pathway combine motion information with semantic information, and these cross-connections improve the model’s performance. At the end of each pathway, a global average pooling layer reduces dimensionality, and the pooled outputs of both pathways are concatenated. Finally, a fully connected layer with a softmax function classifies the action in the input video clip.
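The classification head described above (global average pooling per pathway, concatenation, then a fully connected layer with softmax) can be sketched in NumPy as follows. The feature shapes are assumptions, with channel counts resembling a ResNet-50-based SlowFast, the weights are random, and the lateral connections are omitted; `slowfast_head` is a hypothetical name.

```python
import numpy as np

def softmax(x):
    e = np.exp(x - x.max())
    return e / e.sum()

def slowfast_head(slow_feat, fast_feat, fc_w, fc_b):
    """slow_feat: (C_slow, T_slow, H, W); fast_feat: (C_fast, T_fast, H, W)."""
    slow_vec = slow_feat.mean(axis=(1, 2, 3))      # global average pooling
    fast_vec = fast_feat.mean(axis=(1, 2, 3))      # global average pooling
    joint = np.concatenate([slow_vec, fast_vec])   # (C_slow + C_fast,)
    return softmax(joint @ fc_w + fc_b)            # class probabilities

rng = np.random.default_rng(0)
slow_feat = rng.normal(size=(2048, 4, 7, 7))   # few frames, many channels
fast_feat = rng.normal(size=(256, 32, 7, 7))   # many frames, few channels
num_classes = 400
fc_w = rng.normal(size=(2048 + 256, num_classes)) * 0.01
fc_b = np.zeros(num_classes)

probs = slowfast_head(slow_feat, fast_feat, fc_w, fc_b)
print(probs.shape, int(probs.argmax()))
```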

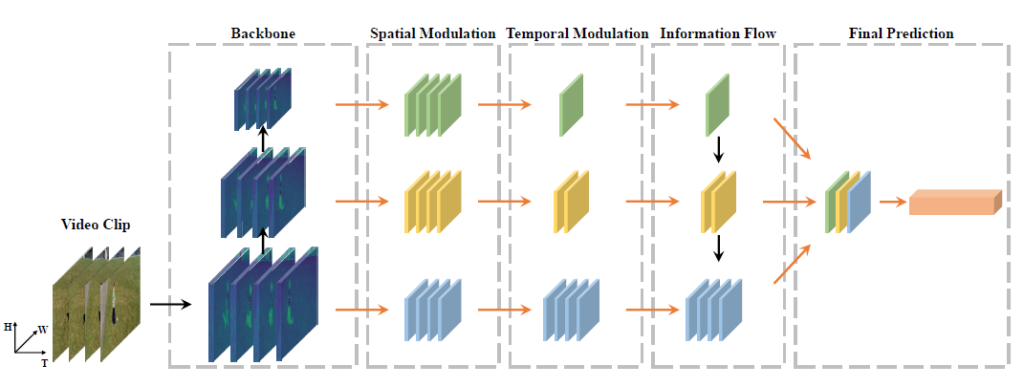

5.3.2. TPN (Temporal Pyramid Network)

Each person performs actions at their own visual tempo, depending on factors such as energy level, illness, and age. TPN is an Early-Fusion-based method that models these visual tempos through a temporal fusion approach.

The key element of TPN’s design is a pair of modulation blocks placed before the features are aggregated: Spatial modulation and Temporal modulation. TPN uses a ResNet backbone, whose successive stages form a feature pyramid, so the backbone produces a set of hierarchical features at different levels.

The spatial modulation block downsamples the features of each level with a different stride, so that the features from all levels share the same spatial size and carry the spatial semantics of the video clip. The temporal modulation block, on the other hand, downsamples the features along the temporal dimension by a different factor for each level, so features at different levels end up with different temporal lengths. Combining these features is like viewing the action at several different speeds.
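The NumPy sketch below loosely illustrates the two modulation steps on a toy three-level feature hierarchy. The level shapes, strides, and temporal factors are assumptions chosen for illustration, and simple average pooling stands in for TPN’s actual modulation layers. After spatial modulation all levels share the same 7 x 7 size, while temporal modulation leaves each level with a different temporal length, i.e., a different “tempo”.

```python
import numpy as np

def spatial_modulation(feat, stride):
    """Downsample (C, T, H, W) features spatially by non-overlapping average pooling."""
    C, T, H, W = feat.shape
    h, w = H // stride, W // stride
    feat = feat[:, :, :h * stride, :w * stride]
    return feat.reshape(C, T, h, stride, w, stride).mean(axis=(3, 5))

def temporal_modulation(feat, factor):
    """Downsample (C, T, H, W) features along time by average pooling."""
    C, T, H, W = feat.shape
    t = T // factor
    feat = feat[:, :t * factor]
    return feat.reshape(C, t, factor, H, W).mean(axis=2)

rng = np.random.default_rng(0)
# Toy backbone hierarchy: lower levels have larger spatial maps.
levels = [rng.normal(size=(64, 8, 28, 28)),
          rng.normal(size=(64, 8, 14, 14)),
          rng.normal(size=(64, 8, 7, 7))]
spatial_strides = [4, 2, 1]    # bring every level to 7 x 7
temporal_factors = [1, 2, 4]   # a different temporal rate per level

modulated = [temporal_modulation(spatial_modulation(f, s), r)
             for f, s, r in zip(levels, spatial_strides, temporal_factors)]
for m in modulated:
    print(m.shape)
# (64, 8, 7, 7)
# (64, 4, 7, 7)
# (64, 2, 7, 7)

# One simple way to aggregate: global-average-pool each level and concatenate.
pyramid_feature = np.concatenate([m.mean(axis=(1, 2, 3)) for m in modulated])
print(pyramid_feature.shape)  # (192,)
```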

The following table briefly compares the networks explained above.

| Network | Pre-trained Model | License | Date | Datasets | GitHub Repo | Approach |
|---|---|---|---|---|---|---|
| SlowFast | Yes | Apache-2.0 | Oct. 2019 | Kinetics-400 | Yes | Early Fusion |
| TPN | Yes | Apache-2.0 | Jun. 2020 | Kinetics-400, Something-Something (V1, V2), Epic Kitchens | Yes | Early Fusion |
| TDN | Yes | Apache-2.0 | Apr. 2021 | Kinetics-400, Something-Something (V1, V2) | Yes | Late Fusion |
| TSN | Yes | Apache-2.0 | May 2017 | UCF-101 | Yes | Late Fusion |

Please feel free to send your comments and questions about this topic. We would be happy to hear your suggestions for improving our work.

Coming Soon at Galliot

In our future releases, we will talk about practical Machine Learning topics such as Data Labeling and Experiment Tracking. These are subjects we struggle with in our daily work, and we hope our posts on them will be helpful for other AI teams.