Adaptive Computer Vision Engine

Problem Statement

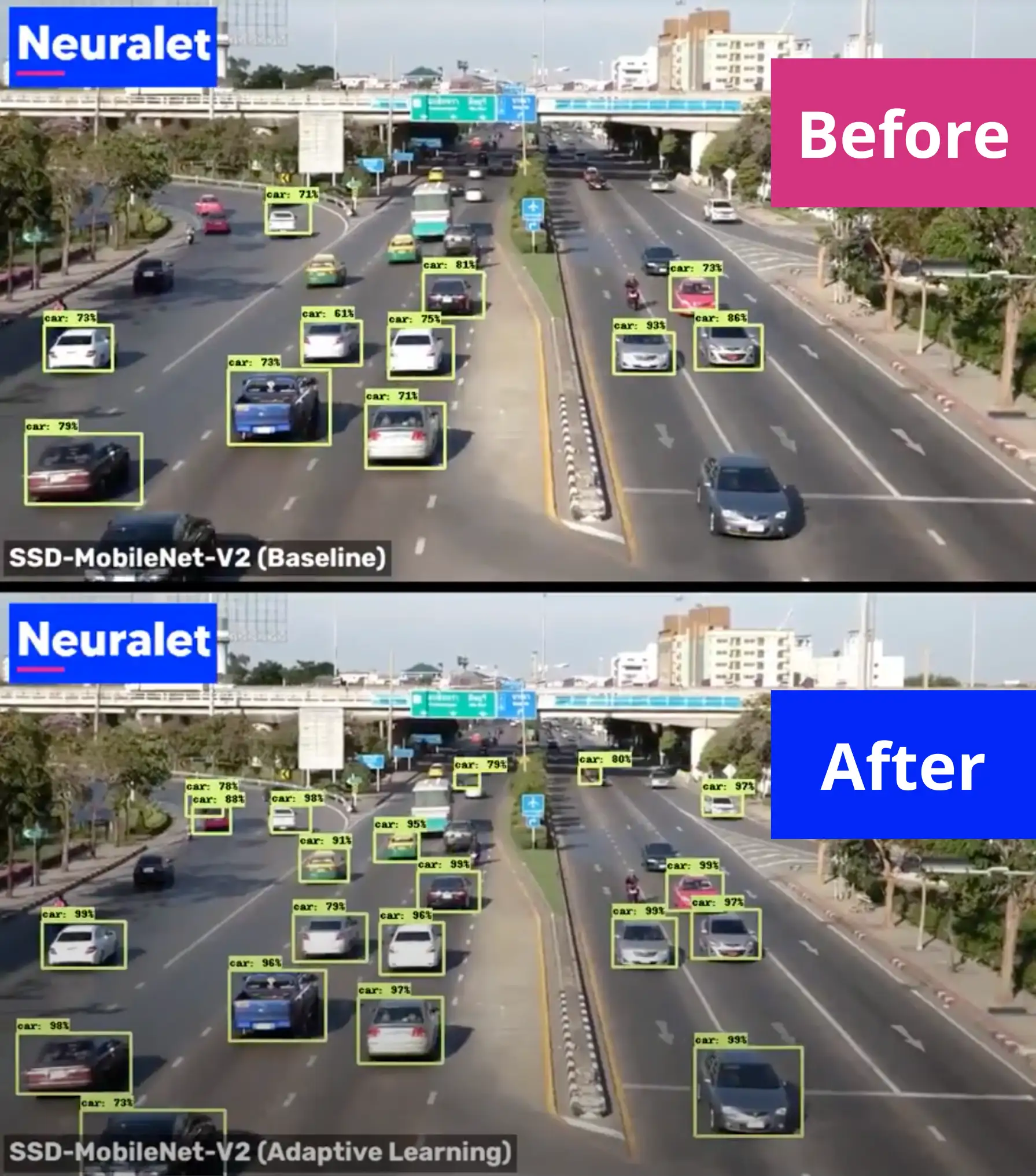

Deploying deep learning computer vision models on mobile and edge devices limits you to lightweight networks with fast inference and low computational cost. However, these models tend to be less accurate than larger, more computationally expensive models, and they generalize worse to unseen environments. To get more accurate results from a lightweight model, you usually need to collect and label a new dataset from your own environment or problem and then train the model on it. This process is slow and hard to scale when it has to be repeated for many different problems and environments.

Our Solution

Our Adaptive Learning Service customizes object detection models to the datasets and environments you provide. Many users lack sufficient training resources (e.g., GPU machines) or the technical knowledge needed to tune training parameters correctly, so the service hides this complexity and configures training automatically. The API service also provides the training machines, so you do not need any training resources of your own.

Through our API service, users can upload their videos, specify their requirements, and request that the model be adapted to their dataset. When training finishes, users can download the trained student model.
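The upload, train, and download cycle described above can be sketched on the client side as building a request and then waiting for the training job to finish. This is a minimal sketch under stated assumptions only: the `AdaptationRequest` fields (`video_paths`, `classes`), the `get_status` callable, and the status strings `"finished"` and `"failed"` are hypothetical placeholders, not the documented API; the API tutorial describes the real endpoints and fields.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class AdaptationRequest:
    """Hypothetical description of one adaptation job (NOT the real API schema)."""
    video_paths: list[str]  # hypothetical: videos recorded in the target environment
    classes: list[str]      # hypothetical: object classes the student model should detect

    def validate(self) -> None:
        # Reject obviously incomplete requests before uploading anything.
        if not self.video_paths:
            raise ValueError("at least one video is required")
        if not self.classes:
            raise ValueError("at least one object class is required")


def wait_for_training(get_status: Callable[[str], str], job_id: str,
                      poll_seconds: float = 30.0, max_polls: int = 1000) -> str:
    """Poll a status source until the job reaches a terminal state.

    `get_status` is a hypothetical stand-in for whatever status call the
    real service exposes; "finished" and "failed" are assumed status names.
    """
    for _ in range(max_polls):
        status = get_status(job_id)
        if status in ("finished", "failed"):
            return status
        time.sleep(poll_seconds)
    raise TimeoutError(f"job {job_id!r} did not finish after {max_polls} polls")


if __name__ == "__main__":
    request = AdaptationRequest(video_paths=["store_entrance.mp4"], classes=["person"])
    request.validate()
    # Simulate a job moving through its lifecycle instead of calling a real server.
    fake_statuses = iter(["queued", "training", "training", "finished"])
    print(wait_for_training(lambda _job: next(fake_statuses), "job-1", poll_seconds=0))
```

Passing the status lookup in as a callable keeps the waiting logic independent of any particular HTTP client, so it can be tested offline (as in the simulated run) and pointed at the real service's status call once that is known. Only after a `"finished"` status would the client move on to downloading the student model.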

Our API service is currently FREE. For more information or to request a demo, contact us at hello@galliot.us.

See the API tutorial here, and read more about the Adaptive Learning approach here.
