NVIDIA DeepStream Python Bindings; Customize your Applications

Original image by Nvidia

This part of the Galliot DeepStream articles introduces the most important DeepStream elements, plugins, and functions. Alongside this, we show you how to build a Pipeline and customize your video analytics applications.

This is the second part of our DeepStream SDK article series. You can find the other parts here:

Part 1: Using NVIDIA DeepStream to deploy Galliot Adaptive Object Detection on Jetsons and X86s.

Part 3: NVIDIA DeepStream Example; Building a Face Anonymizer using Python Bindings.

Building robust vision applications requires high-quality labeled data:

The Galliot article on Data Labeling Methodology and Solutions helps you make better data.

When it comes to building computer vision models for real-time applications or video analytics solutions, you have probably heard of or worked with NVIDIA DeepStream. DeepStream is an AI video analytics framework built on GStreamer’s architecture. It takes streaming data as input and uses computer vision to extract insights from the pixels. DeepStream can process video in real time both on edge devices and on cloud servers. For a concrete use case, see this article on how we deployed our Adaptive Learning model on X86s and Jetson devices using DeepStream.

DeepStream supports Python through Python bindings, which let you customize your applications and add new features to them. Galliot has deployed several applications on DeepStream and customized them using these bindings. This article explains, in simple terms, the DeepStream Pipeline, some basic concepts, and its most important building blocks, such as Elements, Pads, Buffers, and Probes.

Before You Read this Article:

If you are trying to find out how NVIDIA DeepStream and its core functions work, this article will help you customize these functions in your applications. If, however, you want to get familiar with the DeepStream SDK and are looking for a general overview of it, you can refer to this NVIDIA Developer page. For detailed information on DeepStream functions, modules, and classes, we refer you to the NVIDIA documentation.

1. The DeepStream/GStreamer Architecture

To simplify DeepStream concepts, we have used an analogy in the following paragraphs. Skipping these sections will not harm your understanding of the main discussion.

The Analogy

Imagine an automatic car wash that consists of several cabins, each doing a specific task. For example, the first cabin sprays soapy water, the second one brushes, the third one rinses, and the last one dries the car. These stages can vary based on the service you choose; e.g., you can have a cabin for waxing the car. Several sensors are installed inside the car wash. They detect the car in each cabin and activate the process at each step. At the end of each cycle, a conveyor moves the car through a gate to the next cabin. Finally, the clean car is sent back to the street.

In the following sections, we go through the steps of building an actual DeepStream pipeline. We use our Object Detection app as an example to better illustrate the architecture of the DeepStream Python bindings. You can open the deepstream_ssd_parser.py file to follow along and see how it runs the object detection pipeline; the line numbers cited below refer to this file.

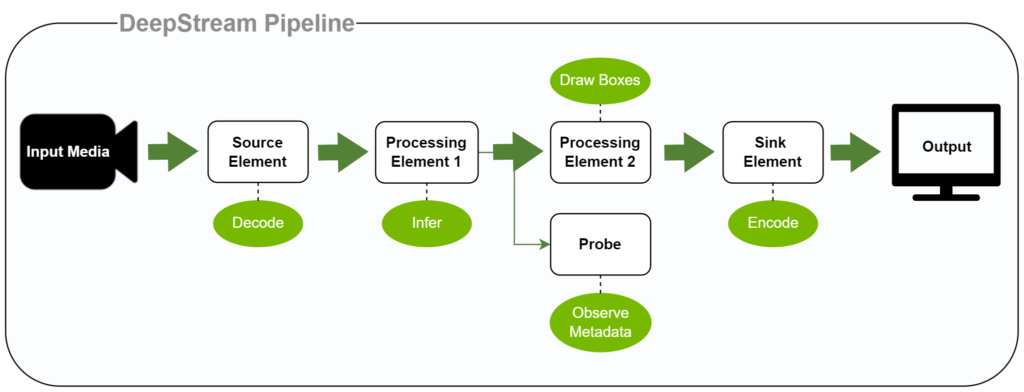

2. DeepStream Pipeline and Elements

DeepStream is built on top of GStreamer. A GStreamer application consists of a pipeline that connects various elements to load a source video, process it, and output the result. The following line of code creates the GStreamer pipeline of your application.

pipeline = Gst.Pipeline() (line 389)

After creating a pipeline, you should add Gst-elements to it. Gst-elements are processing blocks, each of which performs a particular task in the pipeline. GStreamer provides many types of elements, which can be grouped into three categories:

1- Source Elements

2- Processing Elements (Filters)

3- Sink Elements

As a general rule, Source elements produce data. Filters are the processing units in the pipeline: each Filter processes its input data to produce the desired output. Finally, Sink elements consume data and serve as the endpoint of the pipeline, where the output media is produced.

1- Source Elements:

These elements load the input media from an encoded video file, such as an MP4, or from a streaming server (e.g., an RTSP server). They then feed the data to the next elements of the pipeline (the Processing elements).

When you create a source element, you specify the characteristics of the input, for instance, the URI of a video file or of a streaming source.

source = create_source_bin(0, args.input_video) (line 395)

For more information, see the details of the create_source_bin function (line 334).

2- Processing Elements:

In a video analytics application, you usually need to apply some processing to the input video, such as filtering or drawing on the frames. DeepStream/GStreamer provides many processing elements, such as decoding/encoding elements, visual filters, and video converters.

pgie = make_elm_or_print_err("nvinferserver", "primary-inference", "Nvinferserver") (line 408)

On top of the standard Gst-elements, DeepStream provides various elements that help you employ Deep Learning and Computer Vision models in the pipeline. In deepstream_ssd_parser.py, you can see other types of Processing elements, such as the batching element (streammux) and the display element (nvosd).

We also recommend reading the make_elm_or_print_err function (line 64), which is an abstraction for creating different types of elements.

3- Sink Elements:

After processing your media, you typically either store the output in a file or stream it. Sink elements handle these tasks for you.

sink = make_elm_or_print_err("filesink", "filesink", "Sink") (line 444)

Notes:

– As you can see in the code, each element has properties that change its behavior. You can set or change these properties by calling the element’s set_property method.

– After creating the desired elements, you should add them to the pipeline and link them to each other by calling the pipeline.add and element.link methods.

The Analogy

In our car wash example, we can think of the car wash tunnel as a GStreamer pipeline and each cabin as a Gst-element. The first cabin, which checks in the car, is our Source element. The cabins that each carry out a specific task on the car, such as spraying soapy water or brushing, correspond to the Processing elements. The cabin that sends the clean car back to the street is similar to the Sink element.

3. DeepStream Pads

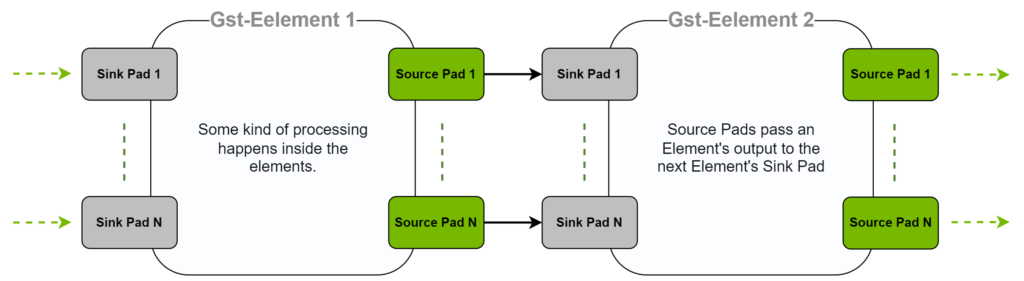

Gst-elements are linked to each other via “Pads,” which are an element’s interface to the outside world. An element can have two types of Pads: 1. a Sink Pad, which receives data from the previous element, and 2. a Source Pad, which passes the element’s output to the next one. When you link two elements, you implicitly connect the Source Pad of one element to the Sink Pad of the next.

The Analogy

To simplify the concept, you can think of our car wash cabins. Cabins have an entrance (input) and an exit (output). The entrance gate (Sink Pad) receives the car from the previous cabin (element), and the exit gate (Source Pad) sends it on to the next cabin.

4. DeepStream Buffer & Metadata

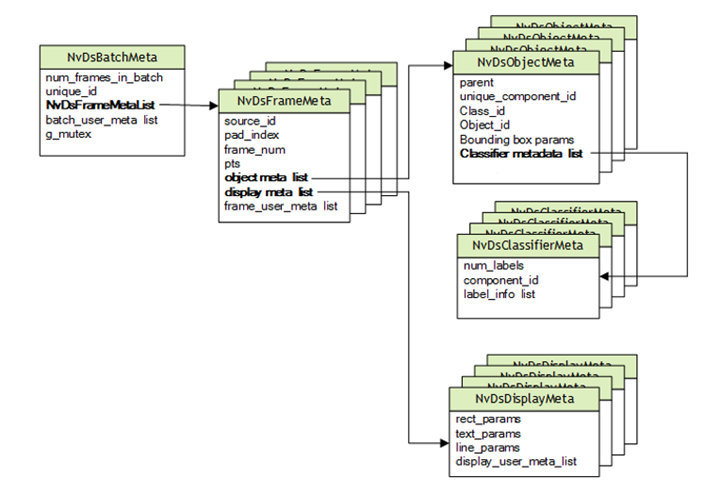

GStreamer uses a structure called “Gst-Buffer” to pass data between the elements of a pipeline. Along with the media data, a buffer carries metadata that flows through the pipeline with it. In DeepStream, this metadata includes information such as the frame number, the batch size, the neural network’s output tensor, etc. Each element can attach “Metadata” to the Gst-Buffer, and downstream elements can retrieve it. DeepStream uses a hierarchical and extensible standard structure for the metadata.

As you can see in Figure 3, the metadata hierarchy starts with the batch-level metadata (NvDsBatchMeta), created by the batching element (“streammux”). The most important field inside NvDsBatchMeta is the list of metadata for the frames in the batch (NvDsFrameMetaList). By iterating over this list, you can retrieve each frame’s metadata. At the frame level, one important object is the User metadata, which stores the output tensor of the object detector model. You can parse and manipulate this tensor and store a post-processed version of it in the Object metadata (next section). Another important object in the frame-level metadata is the Display metadata, which stores the visualization information of a frame. For example, if you want to add text on top of the frame or change how bounding boxes are drawn, you should attach that information to the display metadata.

5. DeepStream Probes

The metadata on a Gst-Buffer can be monitored with Probes along the pipeline. In simple words, you can access and manipulate an element’s input or output metadata by registering a probe on the element’s sink pad or source pad, respectively. In DeepStream Python bindings, Probes are implemented as Python functions. For example, in the object detection sample, we want to post-process the neural network’s raw output and create meaningful bounding boxes. We do this by registering a Probe on the source pad of the inference element (pgie).

pgiesrcpad = pgie.get_static_pad("src") (line 504)

In this line, we get the source pad of the inference element. Then we register the pgie_src_pad_buffer_probe Python function on it. This function transforms the raw output of the neural network into meaningful results and adds them to the metadata (line 228). If you want to apply any post-processing to your model’s output, you should register such a probe on the inference element’s source pad.

On the other hand, assume you want to change the visualization of the app by manipulating the Display metadata. In that case, you should register a probe on the Sink Pad of the display element. In our object detection example, you can see that we first get the sink pad of the nvosd element:

osdsinkpad = nvosd.get_static_pad("sink") (line 514)

Then a function (probe) named osd_sink_pad_buffer_probe is registered on this pad.


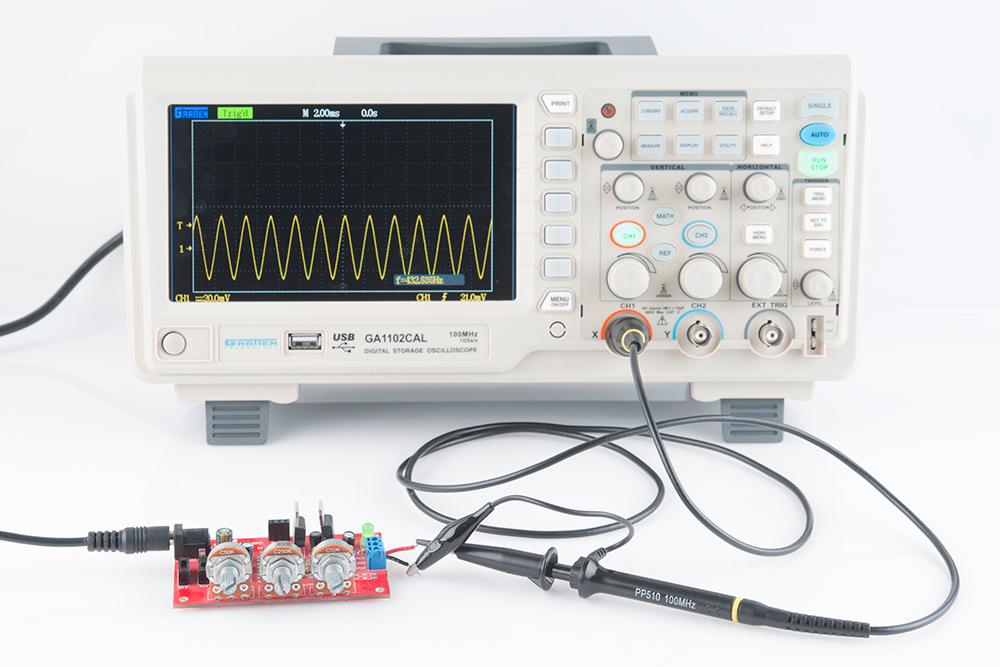

GStreamer probes work somewhat like an electronic device called a test probe. Test probes come in various types, such as voltage probes, oscilloscope probes, and current probes. They are used to measure quantities such as voltage or current, or to display the instantaneous waveform of varying electrical signals. A test probe collects this data only while it is connected to the point being measured, much as a GStreamer probe sees only the buffers that pass through the pad it is attached to. If you are interested, see the Wikipedia article on test probes for more details.

6. Conclusion

DeepStream is built on top of the open-source GStreamer framework and is optimized for building AI-powered applications. NVIDIA DeepStream is written natively in C/C++, but it also supports Python-based application development. In this article, we demonstrated the DeepStream Python bindings. To show how a video analytics pipeline is created, we introduced some basic concepts and objects of GStreamer/DeepStream, including Gst-elements, Pads, Probes, Buffers, and Metadata. Using our object detection application as a sample, we demonstrated how to build a pipeline and use Gst-elements. Additionally, we have listed some DeepStream functions and methods for customizing your application and adding features such as bounding boxes and text.

In the next article, we will look at a real-world use case of a DeepStream pipeline built with the Python bindings. Do you have any suggestions for our future articles? Let us know what you have in mind.

Galliot’s team is constantly working to share its experience and simplify AI development for real-world applications. Do not hesitate to send us your comments and help us improve our content. If you have further questions or would like to work with us, please contact us via hello@galliot.us or the contact us form.

Further Readings

1- Adding a Logo to the DeepStream application

A tutorial on building your own DeepStream application that displays a centered, transparent logo.

2- A brief overview of DeepStream elements

The article provides a simple guide to using Nvidia DeepStream. It covers the basics of DeepStream, including how it works and how to get started with it.

3- Adaptive Learning Deployment with NVIDIA DeepStream

Deploying the Galliot Adaptive Learning Object Detection model on X86s and Jetson devices using NVIDIA DeepStream and Triton Inference Server.

Update

The “DeepStream Python Bindings Example” article is now available on the Galliot blog.
