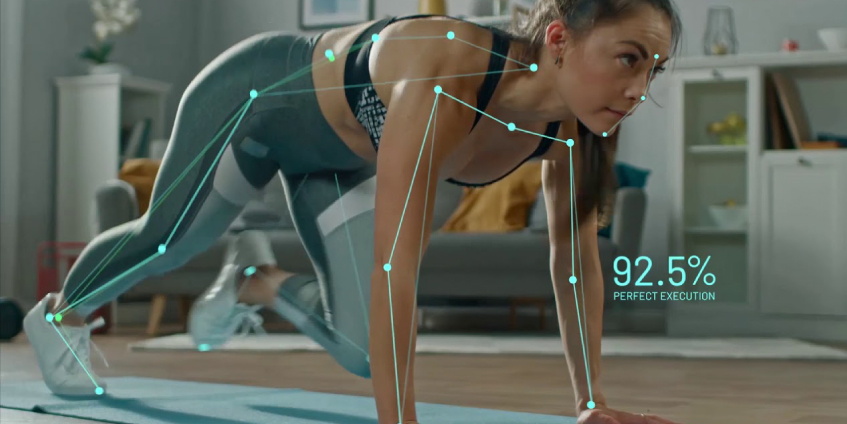

TinyPose: When Your Machine Becomes Your Trainer

Image credit: Solution Analysts

This article walks through our path toward developing an edge-device-friendly Pose Estimation model for real-time applications such as fall detection, physiotherapy, workspace safety, games, and occupancy control.

Galliot TinyPose is part of our work on Human Pose Estimation.

You can visit our Products Page for more info.

If you are building an AI app, you might find our article on Data Labeling Approaches useful.

1. From Face Mask Detection to Real-Time Pose Estimation

In the early days of the Covid-19 pandemic, our team decided to work on a real-time face mask detection application to help keep people in social environments safe and prevent the spread of the virus. To build such a model, we first had to detect the faces in each frame; the model could then indicate whether each face was wearing a mask. One of the solutions we came up with was using a Pose Estimator to locate people’s faces in an image and continue from there. Long story short, that is how we got involved in developing various Pose Estimation models for edge applications. For the details, please take a look at our Edge Face Mask Detection for real-world applications article.

Previously, we optimized the OpenPifPaf Pose Estimator to deploy it on Jetson platforms and run the model on real-world CCTV data (read this article for more info). After that, we looked for other pose estimation solutions to compare with OpenPifPaf and found Alpha Pose. Alpha Pose is an accurate multi-person pose estimator and the first open-source system to achieve 70+ mAP (72.3 mAP) on the COCO dataset and 80+ mAP (82.1 mAP) on the MPII dataset.

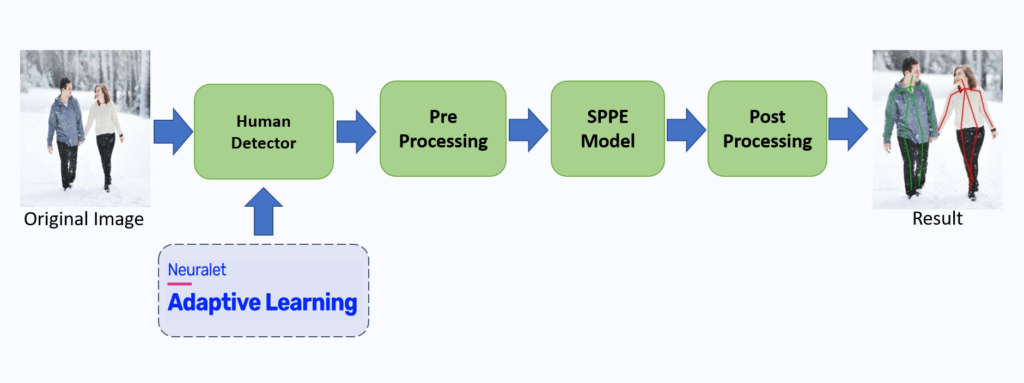

Alpha Pose caught our attention for two reasons. First, it was more accurate than the OpenPifPaf pose estimator for our targeted applications (e.g., surveillance). Second, it uses a Top-Down method with an object detector module, while the OpenPifPaf model is based on a Bottom-Up pipeline. Since Top-Down pipelines rely on an object detector, we saw a strong correspondence between them and our Adaptive Learning object detection model, and we were excited about merging the two. Galliot Adaptive Learning is a solution for training personalized and efficient object detection models with accurate outputs that can adapt to new environments and unseen data without manual data annotation. This system can therefore serve as the human detector module in top-down pose estimator pipelines (Figure 1) and improve the estimator’s performance. (For more details, read the Adaptive Learning article or contact us directly for the API service.)
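To make the Top-Down idea concrete, here is a minimal sketch of such a pipeline in plain Python. It is an illustration under stated assumptions, not Alpha Pose's code: `detect_people` and `estimate_pose` are hypothetical stand-ins for a human detector (for example, an Adaptive Learning model) and a single-person pose network, and the image is a toy list of pixel rows.

```python
from typing import Callable, List, Tuple

Box = Tuple[int, int, int, int]   # (x_min, y_min, x_max, y_max) in pixels
Keypoint = Tuple[float, float]    # (x, y)
Image = List[List[int]]           # toy grayscale image: a list of pixel rows


def crop(image: Image, box: Box) -> Image:
    """Cut the region covered by `box` out of the image."""
    x0, y0, x1, y1 = box
    return [row[x0:x1] for row in image[y0:y1]]


def top_down_pose(
    image: Image,
    detect_people: Callable[[Image], List[Box]],
    estimate_pose: Callable[[Image], List[Keypoint]],
) -> List[List[Keypoint]]:
    """Top-down pipeline: detect each person, then estimate one pose per crop."""
    poses = []
    for box in detect_people(image):
        x0, y0, _, _ = box
        local_keypoints = estimate_pose(crop(image, box))
        # Shift keypoints from crop coordinates back to full-image coordinates.
        poses.append([(x + x0, y + y0) for x, y in local_keypoints])
    return poses


# Hypothetical stand-ins so the sketch runs without any model.
def fake_detector(image: Image) -> List[Box]:
    return [(2, 1, 6, 5)]  # pretend one person was found


def fake_pose_estimator(person_crop: Image) -> List[Keypoint]:
    height, width = len(person_crop), len(person_crop[0])
    return [(width / 2, height / 2)]  # a single "keypoint" at the crop center


image = [[0] * 8 for _ in range(6)]
poses = top_down_pose(image, fake_detector, fake_pose_estimator)
```

Because the detector is just a function passed into the pipeline, swapping in a different detector for a new environment does not require touching the pose network, which is the flexibility described above.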

We believed that integrating these two solutions would allow us to easily build customizable pose estimators for different environments by manipulating the object detection module. In addition, given Galliot’s goal of deploying deep learning models on various edge devices, we decided to deploy the Alpha Pose model on our targeted Edge Devices.

In the following sections, we describe our journey to deploy Alpha Pose on Edge Devices (NVIDIA Jetson and Google Edge TPU), the problems we ran into, and our upcoming plans.

2. Model Conversion

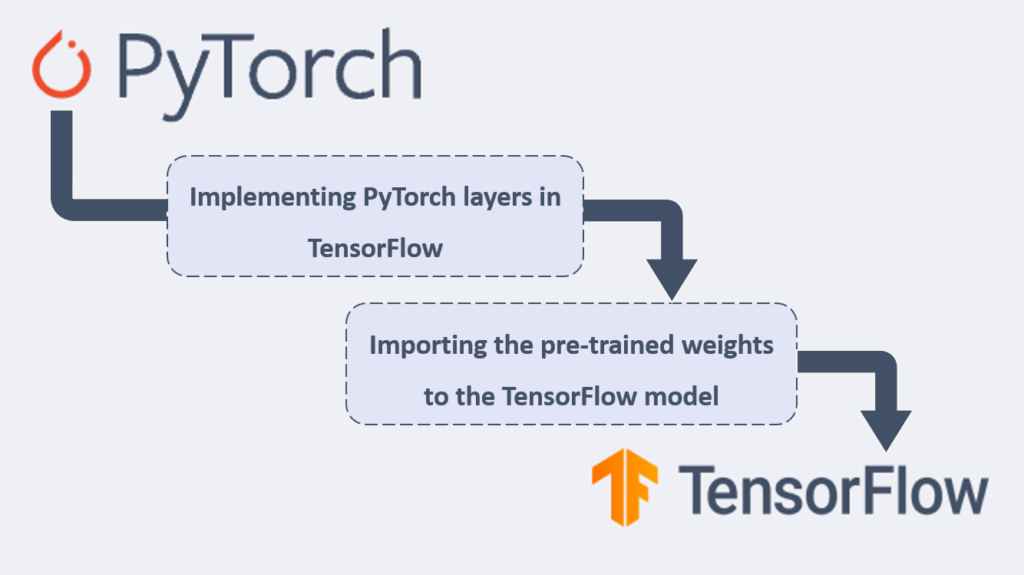

The Alpha Pose repository contains various models for pose estimation. Among these, we chose Fast Pose to work with and optimize for real-time applications. To deploy the model on different edge devices, such as Google Edge TPU and the Jetson family, we faced two challenges. First, we had to re-implement the model’s original architecture in a framework supported by the target edge device. Second, we needed a way to transfer the pre-trained model’s weights into our new architecture.

One of the edge devices Galliot supports is Google Edge TPU, which only runs models in the TensorFlow Lite format. However, the Fast Pose model was originally implemented in PyTorch. Thus, we had to convert the model to a TensorFlow format to be able to deploy it on Edge TPU. To do so, we first implemented all PyTorch modules of the Fast Pose model in TensorFlow. We then wrote the code required to import the model’s pre-trained weights from PyTorch into our new TensorFlow model. Details of the model conversion will be discussed in our future articles.
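One detail that any PyTorch-to-TensorFlow weight import has to handle is tensor layout. The sketch below is not our conversion code (that is left for the future article); it only illustrates the well-known layout difference, using NumPy arrays in place of real framework tensors. PyTorch stores `Conv2d` weights as (out_channels, in_channels, height, width), while Keras `Conv2D` kernels are (height, width, in_channels, out_channels); fully connected weights are likewise transposed. The function names are hypothetical.

```python
import numpy as np


def torch_conv_to_tf(kernel_oihw: np.ndarray) -> np.ndarray:
    """Reorder a PyTorch Conv2d weight (O, I, H, W) into Keras Conv2D layout (H, W, I, O)."""
    return np.transpose(kernel_oihw, (2, 3, 1, 0))


def torch_linear_to_tf(weight_oi: np.ndarray) -> np.ndarray:
    """Reorder a PyTorch Linear weight (out, in) into Keras Dense layout (in, out)."""
    return weight_oi.T


# Toy weights standing in for tensors read from a PyTorch checkpoint.
torch_kernel = np.arange(4 * 3 * 2 * 2, dtype=np.float32).reshape(4, 3, 2, 2)
tf_kernel = torch_conv_to_tf(torch_kernel)

torch_dense = np.arange(5 * 7, dtype=np.float32).reshape(5, 7)
tf_dense = torch_linear_to_tf(torch_dense)

print(tf_kernel.shape, tf_dense.shape)
```

Other layer types, such as depthwise or transposed convolutions and batch normalization statistics, need their own mapping and are not covered by this sketch.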

3. Deploying the Fast Pose model on Edge Devices

After converting the PyTorch model to the TensorFlow format, we tried deploying it on Jetson and Edge TPU. The following subsections take a quick look at each process.

3.1. NVIDIA Jetson Platforms

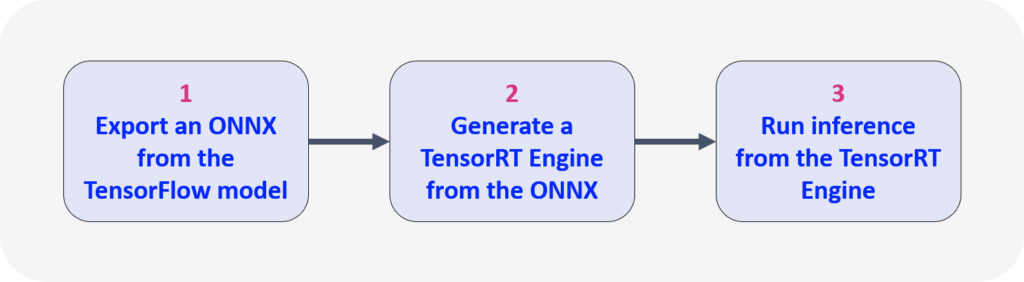

To run the Fast Pose model on the Jetson platforms, we applied the following optimization steps to generate a TensorRT engine and run model inference from it:

1. Export an ONNX model from the TensorFlow model

2. Generate a TensorRT Engine from the ONNX model

3. Run inference from the TensorRT Engine

Note: since these steps are similar to our previous work on the OpenPifPaf model, you can read the details of each step in our “Pose Estimation on NVIDIA Jetson platforms using OpenPifPaf” article.
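As a rough illustration of steps 1 and 2, the sketch below builds the command lines for two commonly used tools: the `tf2onnx` converter and NVIDIA's `trtexec`. This is an assumption for illustration; the exact tooling is described in the OpenPifPaf article rather than here. The helper names and file paths are hypothetical, and step 3 (inference) is not covered.

```python
from typing import List


def tf2onnx_command(saved_model_dir: str, onnx_path: str, opset: int = 13) -> List[str]:
    """Step 1: command that exports a TensorFlow SavedModel to ONNX with tf2onnx."""
    return [
        "python", "-m", "tf2onnx.convert",
        "--saved-model", saved_model_dir,
        "--output", onnx_path,
        "--opset", str(opset),
    ]


def trtexec_command(onnx_path: str, engine_path: str, fp16: bool = True) -> List[str]:
    """Step 2: command that builds a TensorRT engine from the ONNX file with trtexec."""
    command = ["trtexec", f"--onnx={onnx_path}", f"--saveEngine={engine_path}"]
    if fp16:
        command.append("--fp16")  # half precision, often a good fit for Jetson GPUs
    return command


# Hypothetical paths; the engine should be built on the target Jetson itself.
export_cmd = tf2onnx_command("fastpose_saved_model", "fastpose.onnx")
build_cmd = trtexec_command("fastpose.onnx", "fastpose.engine")
print(" ".join(export_cmd))
print(" ".join(build_cmd))
```

Each list can be passed to `subprocess.run(cmd, check=True)` on a machine where the tools are installed.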

Model Performance and Optimization for Jetson Devices:

After converting the Fast Pose model to TensorRT and deploying it on Jetson Nano, we noticed that the end-to-end performance was undesirably slow. Profiling revealed that the model itself is fast, but some pre-processing and post-processing modules take too much time and slow down the whole pipeline. After finding these bottlenecks, we optimized these modules, mainly through vectorization, and increased the performance up to 5 fps. We will go deeper into this process and elaborate on our work in another article.
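To show what vectorization means in this context, here is a small, hypothetical example: decoding keypoint locations from heatmaps, a typical post-processing step for pose estimators. It is not the actual module we optimized; the function names and the heatmap shape are assumptions. The first version loops over every pixel in Python, while the second gets the same result with a few NumPy calls.

```python
import numpy as np


def decode_keypoints_loop(heatmaps: np.ndarray) -> np.ndarray:
    """Find the (x, y) peak of each heatmap with explicit Python loops (slow)."""
    num_keypoints, height, width = heatmaps.shape
    coords = np.zeros((num_keypoints, 2), dtype=np.int64)
    for k in range(num_keypoints):
        best = -np.inf
        for y in range(height):
            for x in range(width):
                if heatmaps[k, y, x] > best:
                    best = heatmaps[k, y, x]
                    coords[k] = (x, y)
    return coords


def decode_keypoints_vectorized(heatmaps: np.ndarray) -> np.ndarray:
    """Same result using NumPy: one argmax over each flattened heatmap."""
    num_keypoints, height, width = heatmaps.shape
    flat_index = heatmaps.reshape(num_keypoints, -1).argmax(axis=1)
    ys, xs = np.unravel_index(flat_index, (height, width))
    return np.stack([xs, ys], axis=1)


# Random heatmaps standing in for model output (17 keypoints, 64x48 resolution).
rng = np.random.default_rng(0)
heatmaps = rng.random((17, 64, 48))

loop_result = decode_keypoints_loop(heatmaps)
vectorized_result = decode_keypoints_vectorized(heatmaps)
print(np.array_equal(loop_result, vectorized_result))
```

On a device with a small CPU such as the Jetson Nano, moving per-pixel work out of Python loops and into array operations like this is usually where the savings come from.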

3.2. Google Edge TPU

To deploy Fast Pose on Edge TPU, we generated a quantized TFLite version of our new TensorFlow model. We then compiled the TFLite model for Edge TPU, but it could not reach a suitable performance and was disappointingly slow (3-4 seconds per frame).
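For readers new to quantization: Edge TPU runs 8-bit integer models, so floating-point values are mapped to integers through a scale and a zero point, with `real_value = (int8_value - zero_point) * scale`. The sketch below works through that arithmetic in plain Python. It is only an illustration of the affine scheme, with hypothetical helper names and an assumed value range; in practice the TFLite converter computes these parameters from calibration data.

```python
from typing import Tuple

QMIN, QMAX = -128, 127  # int8 range


def quantization_params(x_min: float, x_max: float) -> Tuple[float, int]:
    """Derive scale and zero point for a value range (assumes x_min < x_max)."""
    # The range must contain 0.0 so that zero is exactly representable.
    x_min, x_max = min(x_min, 0.0), max(x_max, 0.0)
    scale = (x_max - x_min) / (QMAX - QMIN)
    zero_point = int(round(QMIN - x_min / scale))
    return scale, zero_point


def quantize(x: float, scale: float, zero_point: int) -> int:
    """Map a float to int8, clamping values that fall outside the range."""
    q = int(round(x / scale)) + zero_point
    return max(QMIN, min(QMAX, q))


def dequantize(q: int, scale: float, zero_point: int) -> float:
    """Map an int8 value back to an approximate float."""
    return (q - zero_point) * scale


# Assumed activation range of [-1.0, 3.0], chosen only for illustration.
scale, zero_point = quantization_params(-1.0, 3.0)
q = quantize(1.2345, scale, zero_point)
restored = dequantize(q, scale, zero_point)
print(scale, zero_point, q, restored)
```

The round trip loses at most half a quantization step for in-range values, which is the precision the model gives up in exchange for integer-only inference.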

After inspecting the model, we found that it uses a “Transposed Convolution” module, which is not a good fit for edge devices. We made some changes to this module so that the model could run on Edge TPU with better performance, but we still could not reach an acceptable speed. At this point, we accepted that the Fast Pose model, with its current structure, cannot be practically deployed on Edge TPU.

4. Coming Up Next

We finally decided to write our own training code and build a brand-new model named TinyPose. This way, we can customize the model and design a device-aware architecture for it, choosing and organizing its modules and other elements according to our end devices and their capabilities.

The TinyPose model is currently under development by Galliot’s team and will be released soon. If you are interested in our work, you can subscribe to our feed and receive updates on this project. Feel free to contact us to share your thoughts or ask questions about our other products. You can use the contact form or email us directly at hello@galliot.us.

Coming Soon in Future Releases

Model Conversion: Details of how we imported the PyTorch model into the TensorFlow format.

TinyPose: The proprietary edge-device-friendly Pose Estimation model by Galliot.
